AUTHORS

Massimiliano Cimnaghi

Director Data & AI Governance

@BIP xTech

Israel Salgado

Data Scientist Expert

@BIP xTech

Generative AI: An Overview of its Potential and Threats

The term “Generative AI” refers to various machine learning models that are capable of generating original contents, such as text, images, or audio. These models are trained on large amounts of data, that allow the users to create materials that appear novel and authentic by providing a precise and concise input (named “prompt”).

Large language models (LLMs) are a widely used type of Generative AI models that are trained on text and produce textual content. One prominent example of textual Generative AI application is ChatGPT, a chatbot developed by the AI company OpenAI, that can answer user’s questions and can generate a variety of text contents, including essays, poems, or computer code based on instructions expressed by the users in natural language.

Differently from traditional AI models that are typically customized to execute specific tasks, LLMs can be used for different purposes if properly tuned. The same model can for instance be used for text summarization and question answering. To adapt LLMs to a specific context it is essential to fine-tune the model on proprietary data related to a business-specific tasks. For instance, if a company wants to use LLMs to improve its legal department, models must be tuned with company’s legal documents.

In this early stage of its life, Generative AI has revealed tremendous potential for business and society. Fast content creation can allow companies to save significant amount of business time and resources. Generative AI can be used to power some services, like customer support, improving user experience and reducing labour costs. The fact that these models can be adapted, with proper tuning, to different applications and industries enhances their impact on a greater degree. However, if left unchecked or unproperly used, such AI solutions can also pose significative risks. The potential positive disruption of Generative AI, made of efficiency gains and time savings, may be counterbalanced by risks that should not be underestimated: for this reason, we need to speak about Responsible AI.

Main Sources of Risk for Generative AI

Many challenges and uncertainties may arise in the deployment, in business contexts but not only, of Generative AI tools.

From our point of view, some of the most significative sources of risk within the domain of Generative AI are:

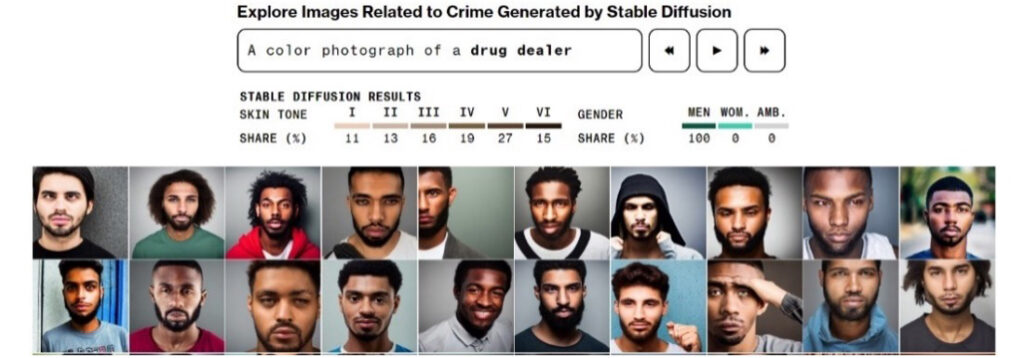

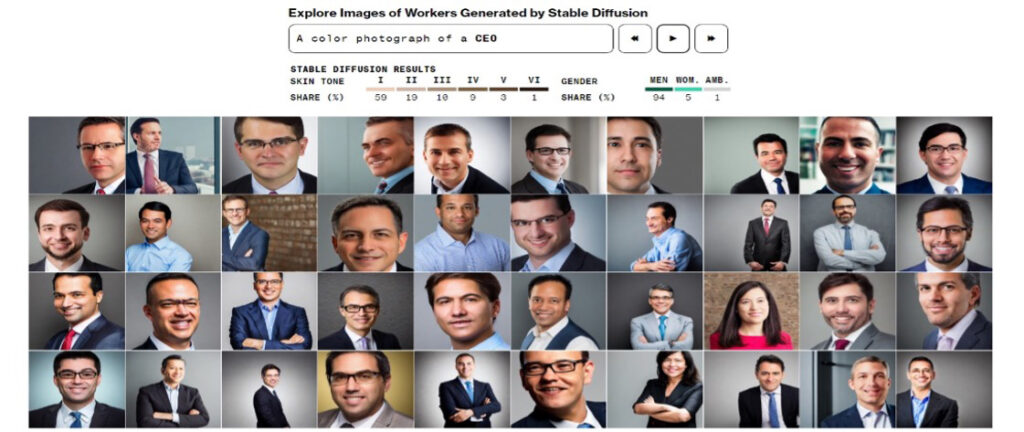

Biases and discriminations embedded in the data used to train the model could possibly be transferred into the content generated by the AI model, hence a Generative AI application could produce results that are discriminatory or offensive for groups of individuals. Many examples of this behaviour have been collected. For instance, Bloomberg showed in an interactive article how one of the most used AI tools for image generation amplified stereotypes about race and gender[1].

Many Generative AI tools can generate new, fictional information (for example inventing events that have never taken place) and presenting it as real world, truthful facts. These misleading outputs can cause significant harm to users and third parties. For instance, this could be the case of a financial advice generated by a Gen AI application, based on facts and figures invented by the application itself. During a demo in front of the press, Bing AI, a chatbot created by Microsoft, analysed earning reports from GAP Inc., a clothing retailer. Some information produced by Bing AI appeared to have been made up[2].

Generative AI can produce harmful content in various forms, including hate speech, violent contents, and discriminatory material. This could happen because the model is trained with data representing negative, hateful, or violent behaviours and ideas. The ethical implications are self-evident, since this could pose serious harm to individuals or groups of individuals. Developers or users of such models could face significant reputational risks, too.

One of the most striking features of Generative AI models is their ability to create fictional contents that appear as realistic, and sometimes even apparently human-made. For this reason, they could be used as the perfect engine to power disinformation campaigns on large scale, deceiving a vast number of individuals with fabricated political, social, or economic messages in order to influence their decision process. This is for example what happened in March of 2023 when AI-generated photos of Donald Trump arrest begun to circulate the web[3].

Issues related to the infringement of intellectual property can arise in many phases of the lifecycle of a Generative AI model.

During the development, any data protected by intellectual property should be used to train the model only under proper licencing, otherwise there are risks of legal disputes, and dire financial consequences. A notable example is the lawsuit filed by Getty Images against Stability AI, a company that according to the accusations has allegedly used millions of images protected by copyright to train its image-generating AI model[4]. Also, Generative AI models may use the inputs provided by the users to further expand their training data sets. An unexperienced user may imprudently share, in the performance of work-related tasks, confidential information or information that constitute intellectual property of the company.

On the other hand, the information produced by a Generative AI model can infringe intellectual property rights: for instance, a model for image generation could create images and logos that are already commercially used by another party, or an AI chatbot could generate text that has been already published.

Generative AI models could be exposed to personal information due to various reasons, for example because this information is a necessary input to train the model or because a model “captures” information from its users. Handling personal information is always a sensitive topic, especially in the case of personally identifiable information (PII) – that is, the information elements that can be used to identify, locate, or contact a specific individual.

It is paramount to ensure the proper handling of personal information by a Generative AI model: including personal information, especially PII, in the training set of the generative model could constitute a compliance issue and have legal consequences. It could even be more unfavourable if PII are included in the output of the model and shared in an uncontrolled way.

When it comes to cybersecurity, there are different risks that can arise due to the deployment of Generative AI models.

The term “Prompt Injection” refers to a specific new type of Generative AI vulnerability that consists of introducing a malicious instruction into the prompt. In some cases, this injection could cause a disclosure of sensitive information, such as a secret key or private data.

Moreover, these models could be used as tools to craft more efficient and effective cybersecurity attacks, phishing schemes, or social engineering tactics. Another potential case of cybersecurity risk arises when these models are used to support code writing in an organization: the code produced by Generative AI models can potentially introduce security vulnerabilities into the underlying codebase if the organization does not ensure the proper security checks. This is for example what happened in early 2023, when a prompt injection attack was used to discover a list of statements that governs how Bing Chat interacts with people who use the service[5].

Responsible AI

Considering the intrinsic risks of Generative AI, a governance approach based on the concept of Responsible AI seems to be of essence for every company that aims to explore and benefit from the potential of Generative AI.

In the article “AI Governance & Responsible AI: The Importance of Responsible AI in Developing AI Solutions” (BIP Group UK, 2023), a comprehensive definition was provided: “Responsible AI provides a framework for addressing concerns and mitigating the negative impacts that AI systems may have on individuals, communities and as an unintended consequence, the businesses that develop and apply them. It encourages the adoption of ethical principles and best practices throughout the AI lifecycle, including data collection and management, algorithm design and development, model training, deployment, and ongoing monitoring.”

It emerges how Responsible AI is a multidisciplinary topic, which involves business, technical, ethical, and legal aspects. To introduce a schematic representation, in BIP we have identified the following principles:

- Fairness: AI systems should treat all people fairly, equitably, and inclusively.

- Transparency and explainability: Users should have a direct line of sight to how data, output and decisions are used and rendered, and when they are interacting with an AI model.

- Privacy: Understand how data privacy preserving is the actual model (e.g., robust to data leakage), not only the data set, particularly in the large Gen AI models.

- Resilience: Models should be able to deliver consistent and reliable outputs over time, by establishing end-to-end monitoring of data, model deployment, and inference.

- Sustainability: Models should adapt to latest technologies and flexibility to connect to any new environmental needs (e.g., reduce CO2).

- Contestability: especially when an AI system can affect individuals and communities, the possibility to intervene and challenge the outcomes of the AI system should be granted to individuals, to allow them to exercise an active oversight over the model.

- Accountability: Owners of AI systems should be accountable for negative impacts. Independent assurance of systems should be enforced.

Upcoming regulatory requirements

The significant impact on society and economy of such a ground-breaking innovation has attracted the attention of lawmakers all around the world, and many institutions are promoting specific regulatory frameworks, to balance the promotion of innovation with the need to protect the safety and fundamental rights of citizens and society.

The EU AI Act, the proposed regulation of the European Union, is expected to be the world’s first comprehensive AI law.

The proposed regulation is the result of years of preparatory work, with notable milestones like the “Ethics Guidelines for Trustworthy AI”[6], made public in 2019 by the European Commission to promote its vision of “ethical, secure and cutting-edge AI made in Europe”. The Guidelines set a list of four ethical principles, rooted in the EU Charter of Fundamental Rights:

- Respect for human autonomy: AI systems should respect human rights and not undermine human autonomy, and particular importance should be given to proper human oversight.

- Prevention of harm: AI systems must prioritize the avoidance of harm and the protection of human dignity, mental and physical well-being, and should be technically secure to prevent malicious use.

- Fairness: The development, deployment and use of AI systems must be fair, ensuring equal and just distribution of both benefits and costs, and ensuring that individuals and groups are free from unfair bias, discrimination, and stigmatisation.

- Explicability: This means that processes need to be transparent, the capabilities and purpose of AI systems openly communicated, and decisions – to the extent possible – explainable to those directly and indirectly affected.

Based on these contributions[7], the EU AI Act seeks to ensure that AI systems placed on the Union market and used are safe and respect existing law on fundamental rights and Union values, while facilitating the development of a single market for lawful, safe, and trustworthy AI applications.[8]

At the time of writing this document, the regulation is in the final stage of negotiation, and a possible date for its approval is expected to be in 2023. Regulatory compliance will be an obligation for the organizations that aspire to explore the advantages of AI, including Generative AI, for businesses operating within any of the twenty-seven countries that make up the European Union. In case of infringement of the prescriptions of the EU AI Act, companies may incur in penalties up to the 30 million EUR or 6% of its total worldwide annual turnover for the preceding financial year, whichever is higher[9].

The European Union is not the only political institution focusing the attention to regulation of Artificial Intelligence.

Similarly, the UK Government has continued its work to implement guidance around the use of AI, adopting five principles as the foundation of Responsible AI (Human-centric, Fairness, Transparency, Accountability, Robustness and safety; the principles are discussed in more detail in the BIP-Group White Paper published in May 2023). Other countries are planning regulations, like Australia, and other political bodies are seeking inputs on regulations, as is the case of the United States.

There are reasons to believe that the resulting global regulatory framework will become vast and demanding, especially for companies running their business in different geographical areas. In our view, adopting a robust AI Governance framework based on the principles of Responsible AI will be the best way to achieve regulatory compliance, while simultaneously ensuring a first line of defence against reputational and operational risks.

The importance of AI Governance

AI Governance refers to the set of policies, regulations, ethical guidelines, operating model, methodologies, and tools that manage and control the responsible development, deployment, and use of AI technologies within organizations.

AI governance mitigates risks possibly posed by Generative AI by setting clear guidelines and ethical principles for the development and deployment of AI systems, ensuring they adhere to fairness, transparency, and privacy standards. It establishes accountability mechanisms within organizations, making it easier to identify and rectify issues promptly. Additionally, AI governance involves ongoing monitoring and risk assessment, enabling organizations to proactively address emerging risks, thereby reducing the potential for harmful consequences associated with AI technologies.

To provide a few examples, adopting standards and guidelines for the development and deployment of Gen AI application can prevent the unintentional use of copyrighted information or sensitive personal information during the training or the utilization of the model. An appropriate model cataloguing can track down the AI application developed or deployed by an organization, and the related risks. Introducing metrics to monitor the fairness of an AI application can mitigate the risk of biases in the algorithm or harmful content generation.

In our view an AI Governance framework based on Responsible AI is an enabling factor for promoting AI adoption while safeguarding organizations against the regulatory, operational, and reputational risks.

BIP as a partner for AI Governance

BIP is conducting a series of initiatives to develop an all-encompassing strategy for AI Governance, aligning our resources, expertise, and service offer to guide and support our customers in managing their AI applications in an effective, valuable, and responsible manner:

- Defining a comprehensive AI Governance framework, that will enable our clients to translate into their organization and operational approaches the principles and ethical aspects of Responsible AI. Our framework revolves around key components, such as:

- Model cataloguing: creating a complete overview of the AI models, and the related risks, adopted by an organization.

- Organization and roles: to identify clear responsibilities to govern AI and ensure accountability around regulatory and ethical issues.

- Policies and standards: Responsible AI principles and technical standards to regulate the development and deployment of AI models.

- Processes: Standardize the development and control of AI Models

- Metrics: define metrics to measure dimensions that are relevant for Responsible AI, including technical performance (e.g., accuracy and precision) and ethical aspects (e.g., explainability, fairness).

- Develop tailored solutions for specific use cases. For instance Giulia Ometto, Management Consultant at BIP UK proposed an approach to mitigate the Gender Bias through “A multi-dimensional approach involving data, development practices, user feedback and regulatory measures to be adequately addressed.”

- Establish targeted partnerships to offer comprehensive solutions to our customers. For example, BIP and Credo AI have commenced a partnership to advance the widespread adoption of Responsible AI. The Credo AI platform supports the adoption of AI models and provides users with the means to monitor and manage risks across the AI lifecycle. It incorporates emerging regulatory requirements to ensure that organizations stay compliant with the necessary rules and standards and allows users to confidently adopt AI solutions that maximise return on investment whilst minimising risk.

References

- [1] Nicoletti, L., Bass, D. (2023). Human are biased. Generative AI is even worse. Bloomberg. https://www.bloomberg.com/graphics/2023-generative-ai-bias/

- [2] Leswing, K. (2023, February 14). Microsoft’s Bing A.I. made several factual errors in last week’s launch demo. CNBC. https://www.cnbc.com/2023/02/14/microsoft-bing-ai-made-several-errors-in-launch-demo-last-week-.html

- [3] Ars Technica (2023, March 21). AI-faked images of Donald Trump’s imagined arrest swirl on Twitter. https://arstechnica.com/tech-policy/2023/03/fake-ai-generated-images-imagining-donald-trumps-arrest-circulate-on-twitter/

- [4] Brittain, B. (2023, February 6). Getty Images lawsuit says Stability AI misused photos to train AI. Reuters. https://www.reuters.com/legal/getty-images-lawsuit-says-stability-ai-misused-photos-train-ai-2023-02-06/

- [5] Art Tecnica (2023, February 10) AI-powered Bing Chat spills its secrets via prompt injection attack. https://arstechnica.com/information-technology/2023/02/ai-powered-bing-chat-spills-its-secrets-via-prompt-injection-attack/

- [6] High-Level Expert Group on Artificial Intelligence, Ethics Guidelines for Trustworthy AI, 2019.

- [7] See 3.2, “Collection and use of expertise”, of the EU AI ACT.

- [8] See 1.1, “Reasons for and objectives of the proposal”, of the EU AI ACT.

- [9] See article 71, “Penalties”, of the EU AI ACT.

AUTHORS

Massimiliano Cimnaghi

Director Data & AI Governance

@BIP xTech

Israel Salgado

Data Scientist Expert

@BIP xTech

Would you like to know more?

Write to us by filling in this form and we will send you all the information you need.